Agile Development, Continuous Integration (CI) and Continuous Delivery (CD) have become more than just buzzwords. They are key success factors in the software industry. The idea is to have a software pipeline that covers all stages from concept to deployment while ensuring repeatability and reliability, in order to enable frequent releases with constant feedback. This concept is a perfect fit for the increasing demands on cost and quality in automotive software development.

There is a catch, of course. When I first came into contact with software development in the automotive domain, I was frustrated to see how slowly things were moving. It took me some time to get used to the fact that the diverse landscape of tools and processes and the extensive dependencies on hardware make the transition to CD extremely complex in automotive (compared to other domains like app or web development). Additionally, developers in automotive often have the feeling that testing is slowing them down.

The following three best practices help you integrate your testing activities efficiently into an automated development process, connecting modern CD principles with automotive and model-based development.

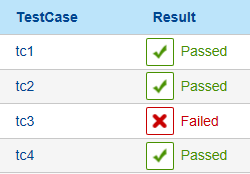

1. Define automatic verdicts for your tests

I still see a lot of cases where a test is performed like this:

- Specify some inputs for the SUT (System Under Test)

- Simulate the SUT with these inputs

- Inspect the outputs

- Manually decide on passed or failed

Usually this process is followed because requirements are missing, or because extremely low-level requirements describe algorithms that implement complex mathematical calculations. Model-based development with MATLAB/Simulink in particular makes it tempting to implement a rather manual and interactive test workflow. The problem with the approach described above is that it requires manual effort to choose a verdict (passed/failed), and that effort grows as iterations become more frequent. In addition, the risk of human error is very high when the developer has to repeat the same task over and over under the pressure of a project deadline. In general, this approach is not transparent and simply does not scale, thereby counteracting the benefits you want to achieve from your CI/CD process.

How to solve this? In addition to the inputs that you specify, your requirements-based test cases should always contain information about the desired output behavior of the SUT as derived from the requirements, either in the form of expected values (usually with tolerances), formulas, or even scripts that analyze the simulated output. From this point on, your requirements-based test cases are very low-maintenance: in general, they only have to be adapted if the related requirements change or if structural changes to the SUT affect its interface. Having an automatic verdict for all of your tests is vital for efficiency and greatly increases transparency, while removing the manual verdict as a source of human error. In addition, automatic verdicts ensure scalability as iterations become more and more frequent.
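To make the idea concrete, here is a minimal sketch of a test case that carries its own expected values and tolerance, so the verdict is computed instead of decided by hand. The names `VerdictCase`, `verdict` and `gain_sut`, as well as the tolerance value, are assumptions chosen for illustration; in a real project the simulation call would be replaced by whatever runs your SUT.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class VerdictCase:
    """A requirements-based test case: inputs plus expected outputs (hypothetical structure)."""
    name: str
    inputs: Sequence[float]
    expected: Sequence[float]
    abs_tol: float = 1e-3  # assumed value; in practice taken from the requirement


def verdict(case: VerdictCase,
            simulate: Callable[[Sequence[float]], Sequence[float]]) -> str:
    """Simulate the SUT and return 'passed' or 'failed' without manual inspection."""
    actual = simulate(case.inputs)
    if len(actual) != len(case.expected):
        return "failed"
    within_tol = all(abs(a - e) <= case.abs_tol
                     for a, e in zip(actual, case.expected))
    return "passed" if within_tol else "failed"


def gain_sut(inputs: Sequence[float]) -> list:
    """Stand-in for the simulated SUT: a simple gain of 2.0."""
    return [2.0 * x for x in inputs]


case = VerdictCase("gain_doubles_input",
                   inputs=[0.0, 1.0, 2.5],
                   expected=[0.0, 2.0, 5.0])
print(case.name, verdict(case, gain_sut))
```

Because the expected behavior lives in the test case itself, the same case can be re-run on every change without anyone looking at the output signals.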

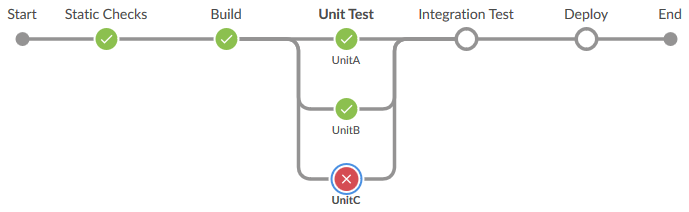

2. Failed tests should impact your process

What becomes apparent is that the idea of a failed test is associated with bad feelings. Often the only reaction to failed test cases is that the failure appears in a report. Downstream steps sometimes continue as if nothing happened because of the pressure of producing a deliverable piece of software:

“We can still judge if the failed test case really is an issue later on.”

This approach, which is motivated by the belief that delivering something is better than delivering nothing, ignores a few facts, especially regarding unit tests:

- The most resource-intensive and time-consuming stages (Hardware-in-the-Loop, prototypes, etc.) come after the unit tests.

- A fix can reveal other defects or introduce regressions.

- The sooner you fix an issue, the cheaper it is to do so. Since your unit tests should finish very soon after a change is introduced, applying a fix immediately does not cost much time.

- Debugging is easier for a small number of issues. Waiting until a lot goes wrong will make it extremely hard to isolate individual issues.

With the Fail Fast Principle in mind, developers, testers, team leaders and managers should aim for a paradigm shift: Test failures are good and they should stop your pipeline.
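One common way to let failures stop a pipeline is to turn the test verdicts into a process exit code, since CI servers generally treat a non-zero exit status as a failed step. The following is a minimal sketch under that assumption; the function name `gate_exit_code` and the test names in the example are hypothetical.

```python
import sys
from typing import Mapping


def gate_exit_code(verdicts: Mapping[str, str]) -> int:
    """Return 0 if every test passed, 1 otherwise, and list the failures."""
    failed = sorted(name for name, v in verdicts.items() if v != "passed")
    for name in failed:
        print(f"FAILED: {name}", file=sys.stderr)
    return 1 if failed else 0


# Hypothetical verdicts collected from a unit test run.
results = {"gain_doubles_input": "passed", "limiter_saturates": "failed"}
exit_code = gate_exit_code(results)
print("pipeline step exit code:", exit_code)

# In an actual pipeline step you would end the script with:
#     sys.exit(exit_code)
```

With this gate in place, downstream stages such as Hardware-in-the-Loop runs are only started for changes that passed all unit tests.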

Regardless of standards which require certain test activities, you should embrace not only testing itself but also failed tests. Why? Because they prove that your quality assurance process works. Try to convince an auditor that your process is solid if you cannot show them any failed tests. Not that easy, right? Furthermore, each run that is blocked by failed tests saves valuable resources and time which would otherwise be wasted, and helps prevent costly and highly damaging recalls.

For those of you who still struggle with the thought of stopping a precious pipeline run: Don’t worry. Stopping the pipeline does not mean giving up. See the next section for how to deal with failures.

3. Have a next-step plan for failures

Software development pipelines (not only in automotive) are often optimized for the happy path. Showing that the pipeline can turn a change into a new deliverable of the software is a natural first step when setting up an automated process. If something fails along the way, it is treated as an exception.

However, as we have established above, test failures are to be expected. The definition of a successfully implemented software delivery pipeline is not that it produces a deliverable for every change but that it produces a deliverable for every change that passed all tests. Since failed tests should block the pipeline, an important contribution to efficiency is to prepare everything that developers and testers need to know in order to react to failures. This includes:

- Links to requirements

- Reports, Logs, Stack Traces

- Data for debugging (SUT + test cases)

Having the next steps prepared enables your teams to fix broken builds immediately, which is a vital part of the CD concept. Developers should not add new functionality before the existing features meet the expected quality.
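As a rough sketch of what "having the next steps prepared" can look like, the pipeline could emit one small record per failed test that points to the items listed above. The `FailureBundle` structure, its field names, the requirement ID and all file paths below are placeholders invented for this example, not part of any specific tool.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class FailureBundle:
    """Everything a developer needs to start analyzing one failed test (hypothetical layout)."""
    test_name: str
    requirement_links: List[str]   # links or IDs of the related requirements
    report_paths: List[str]        # reports, logs, stack traces
    debug_artifacts: List[str] = field(default_factory=list)  # SUT + test case data


def to_json(bundle: FailureBundle) -> str:
    """Serialize the bundle so it can be attached to the failed pipeline run."""
    return json.dumps(asdict(bundle), indent=2)


# Placeholder values for illustration only.
bundle = FailureBundle(
    test_name="limiter_saturates",
    requirement_links=["REQ-042"],
    report_paths=["reports/limiter_saturates.html", "logs/unit_tests.log"],
    debug_artifacts=["debug/limiter_model.zip", "debug/limiter_saturates_case.json"],
)
print(to_json(bundle))
```

Attaching such a record to the blocked run means the person fixing the build does not have to search for the requirement, the logs or the failing model version first.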

Conclusion

The perception of testing activities, especially unit testing, must shift from being a hassle to being a valuable resource. In order to achieve this, you should aim for the highest possible degree of automation, which includes automated pass/fail criteria for your tests and the automated preparation of analysis and debugging environments. With these things handled, there is no reason to fear a failed test: your pipeline will stop, saving valuable resources and at the same time providing all you need to identify the cause of the issue.