The Journey – from idea to solution

It seems like every day more and more software teams are developing software in a model-based way, using Simulink models and auto-generated c-code… and Ford is no different. Many low-level math and interpolation routines have been developed by the central software team as libraries that can be used by different production teams. To fulfill specific requirements on the code side, the c-code for these routines is hand-coded. In model-based development, the Code Replacement Library (CRL) can then be used to integrate the handwritten code files during the code generation process.

The problem with this process: even for simple algorithms, it’s likely that the behavior on the model level and the behavior on the code level don’t match 100%. This leads to errors down the line that can be a nightmare to analyze.

This journey takes us from the initial objective to a solid and automated test workflow. A journey is rarely straightforward from start to finish. But at the end of the day, the detours, hurdles, and discarded approaches find us appreciating the maturity and simplicity of the final result even more.

Challenge #1: Catch Discrepancies

Because the core algorithm is hand-coded, the MIL behavior (Simulink blocks) and the SIL behavior (hand-coded function) can differ quite a lot.

->We need to test if model and code behavior are aligned!

Challenge #2: Create Tests

For each function, we need a set of appropriate test cases which give us the needed confidence in the correct functionality. Interface coverage and structural MC/DC coverage were defined as completeness criteria.

->We need a solution to systematically generate the needed tests using a fully automated process.

Challenge #3: Make it Scale

There’s a high number of libraries which need to be tested in multiple Matlab versions. Any manual work is error-prone and would create a massive effort.

->The degree of automation must be as high as possible, otherwise the effort will be immense.

Challenge #4: Collaboration Effort

The developed test models, verification results, and coverage results need to be reviewed by a broad team of code developers, MBD testers and quality engineers to verify and reconcile differences.

->The solution must make use of a state-of-the-art continuous integration process.

Take 1: Anything can be scripted!

Mohammad Kurdi and his team already had a lot of scripting in place to create the Simulink models for each library routine and hook it up with the correct handwritten c-file. Therefore, it was a natural next step to also write the logic that creates test cases for the desired input combinations and use those for a back-to-back test MIL vs. SIL.
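To make the idea of script-generated input combinations concrete, here is a minimal sketch in Python (the team's actual scripts were m-scripts and are not shown in this article). It assumes the combinations are built from the min, center and max of each input, the same levels mentioned later for the UDCG approach. The input names and ranges are hypothetical.

```python
from itertools import product

# Hypothetical interface description: input name -> (min, max).
# Names and ranges are illustrative, not taken from a real library routine.
INPUT_RANGES = {
    "x": (-10.0, 10.0),
    "gain": (0.0, 4.0),
}

def levels(lo, hi):
    """Return the min / center / max values for one input."""
    return (lo, (lo + hi) / 2.0, hi)

def input_combinations(ranges):
    """Build every combination of min/center/max across all inputs."""
    names = list(ranges)
    per_input = [levels(*ranges[name]) for name in names]
    return [dict(zip(names, values)) for values in product(*per_input)]

combos = input_combinations(INPUT_RANGES)
print(len(combos))  # 3 levels for each of 2 inputs -> 9 combinations
```

The number of combinations grows as 3 to the power of the number of inputs, which is one reason a hand-rolled generator needs extra logic for routines with larger interfaces.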

However, after some initial success this approach proved very demanding. A lot of special corner cases and advanced scenarios needed to be handled. The effort for an algorithm that calculates the desired input combinations was acceptable but in addition, implementing simulation on MIL and SIL, handling tolerances and integrating tools for coverage measurement, etc. would add a huge overhead.
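Tolerance handling alone shows how quickly the scripting grows. The following sketch, again in Python and purely illustrative, compares a MIL output trace with a SIL output trace step by step. The function name, the tolerance values and the sample traces are assumptions made for this example.

```python
import math

def back_to_back(mil_outputs, sil_outputs, abs_tol=1e-6, rel_tol=1e-6):
    """Compare two output traces step by step.

    Returns a list of (step, mil_value, sil_value) for every step where
    the values differ by more than the given tolerances.
    """
    if len(mil_outputs) != len(sil_outputs):
        raise ValueError("traces must have the same number of steps")
    deviations = []
    for step, (mil, sil) in enumerate(zip(mil_outputs, sil_outputs)):
        if not math.isclose(mil, sil, rel_tol=rel_tol, abs_tol=abs_tol):
            deviations.append((step, mil, sil))
    return deviations

# Made-up traces: step 1 differs only by rounding noise, step 3 really deviates.
mil_trace = [0.0, 0.5, 1.0, 1.5]
sil_trace = [0.0, 0.5000000001, 1.0, 1.6]
failed = back_to_back(mil_trace, sil_trace)
print(failed)
```

A real test tool would additionally need per-signal tolerances, data type awareness and reporting, which is part of the overhead described above.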

The scripting effort would indeed get out of hand. It is not a trivial task to write a test tool with a bunch of scripts, even when focusing on a single use case. What is more: in addition to the m-scripts, a Jenkins Pipeline script will eventually be required.

Take 2: Semi-Automatic

It’s common to find teams at Ford using BTC EmbeddedTester for MIL/SIL tests of their software. Also, the team that writes the hand-coded implementations for these standardized libraries performs their requirements-based testing with BTC.

So, the next approach was to bring the script-generated vectors for the input combinations into BTC and perform the respective test steps there:

- Matlab:
  - Run script to generate input combination vectors (produces CSV files)
- BTC:
  - Create test project that knows about the model, the hand-written code and how they relate to each other
  - Import CSV files (input combinations and manual vectors)
  - Run Back-to-Back test MIL vs. SIL (MIL runs via Matlab)
  - Create reports (B2B report, Coverage Report)
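The hand-over between the two tools in the steps above is a CSV file. As a rough illustration in Python, the export side could look like the sketch below. The column layout (one column per input, one row per vector) is an assumption for this example and is not meant to represent the CSV format that BTC actually imports.

```python
import csv
import io

def vectors_to_csv(vectors, stream):
    """Write input vectors as CSV: header row with input names, one row per vector."""
    names = list(vectors[0])
    writer = csv.DictWriter(stream, fieldnames=names, lineterminator="\n")
    writer.writeheader()
    writer.writerows(vectors)

# Hypothetical input vectors for a routine with two inputs.
vectors = [
    {"x": -10.0, "gain": 0.0},
    {"x": 0.0, "gain": 2.0},
    {"x": 10.0, "gain": 4.0},
]

buffer = io.StringIO()  # stands in for a file on disk
vectors_to_csv(vectors, buffer)
csv_text = buffer.getvalue()
print(csv_text)
```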

After some initial work, Thabo Krick from BTC became involved and proposed to automate the process via Jenkins. After all, the process was still far from perfect because:

- Each test run generated a number of reports for each model. Having hundreds of these models, it was not possible to get a decent overview.

- Also, the coverage reports showed that – even on these rather simple libraries – the script-based input combinations often didn’t cover all parts of the code.

- Automating the process was challenging because we needed to talk to Matlab (m-script to generate the input combinations) and BTC (testing on MIL and SIL), while exchanging data between both tools.
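The coverage gap mentioned in the list above is easy to reproduce with a toy example. The routine below is hypothetical and written in Python for brevity. It has three branches, but the min/center/max values of its input range only reach two of them.

```python
def piecewise_gain(x):
    """Toy routine with three branches; returns (output, branch label)."""
    if x < 10.0:
        return 1.0 * x, "low"
    if x < 20.0:
        return 10.0 + 2.0 * (x - 10.0), "mid"
    return 30.0 + 0.5 * (x - 20.0), "high"

# Assumed input range 0..100, stimulated with min / center / max only.
x_min, x_max = 0.0, 100.0
stimuli = [x_min, (x_min + x_max) / 2.0, x_max]

hit = {piecewise_gain(x)[1] for x in stimuli}
missed = {"low", "mid", "high"} - hit
print(sorted(hit), sorted(missed))
```

The "mid" branch is never executed, so a structural coverage report for this routine would show a gap even though every boundary of the input range was tested.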

Take 3: Full Throttle

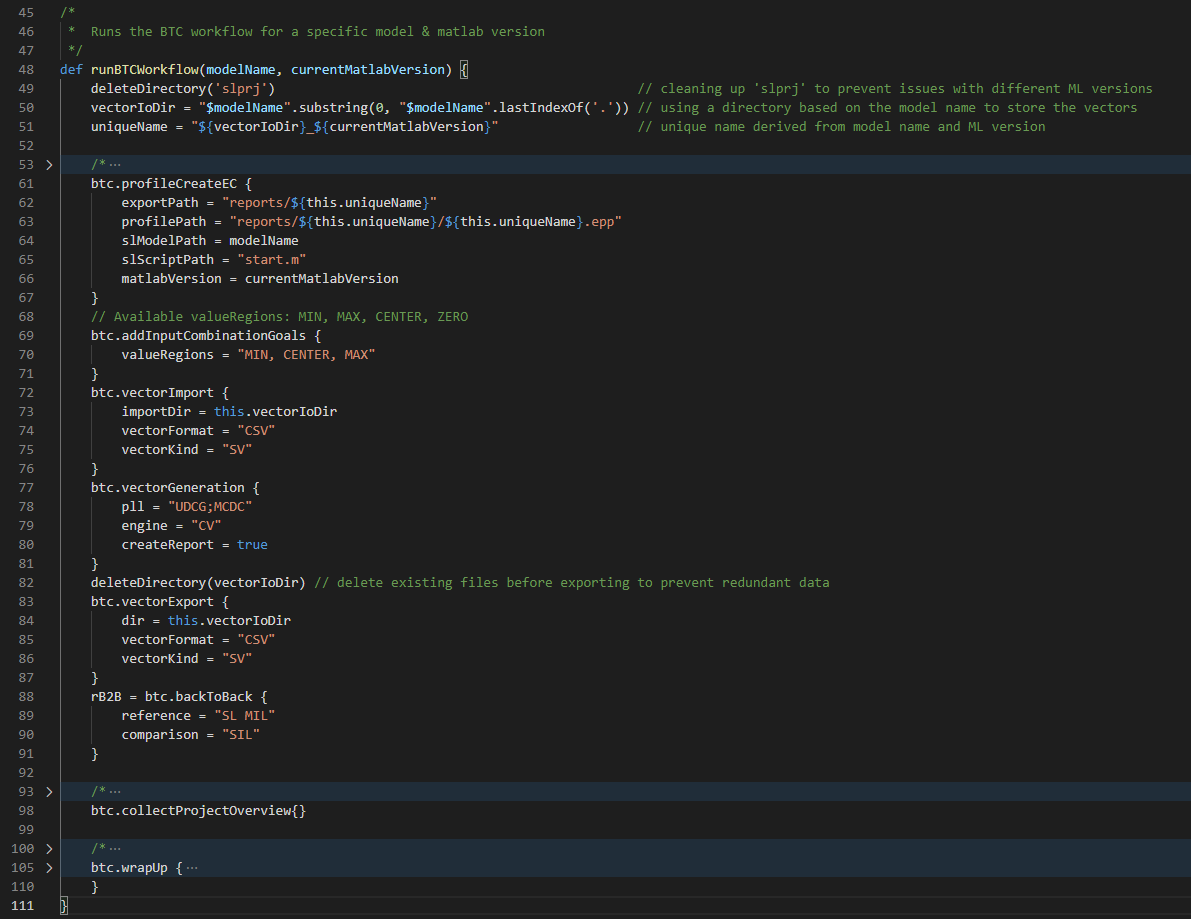

The discussions that followed produced a workflow, which was fully automated in a Jenkins Pipeline purely relying on the BTC Pipeline steps.

Instead of a script-based approach to generate input combinations, BTC’s User-defined Coverage Goals (UDCG) feature can be used. Based on the available interface, UDCGs allow us to define a Boolean expression describing a state. The stimuli vector generation capabilities in BTC EmbeddedPlatform then ensure mathematically that these goals are met. The BTC Jenkins plug-in provides a function that dramatically simplifies the expression of all desired input combinations.
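The difference from the scripted approach is that a goal describes a state to be reached rather than a fixed input vector. The Python sketch below only illustrates that idea by turning a hypothetical interface into one Boolean expression per min/center/max combination. The expression syntax, the goal labels and the interface are assumptions for this example. They are not the actual UDCG syntax and not the function provided by the BTC Jenkins plug-in.

```python
from itertools import product

# Hypothetical interface: input name -> (min, max).
INTERFACE = {"x": (-10.0, 10.0), "gain": (0.0, 4.0)}

def coverage_goals(interface):
    """Return (label, expression) pairs, one per min/center/max combination."""
    names = list(interface)
    level_sets = []
    for name in names:
        lo, hi = interface[name]
        level_sets.append([("min", lo), ("center", (lo + hi) / 2.0), ("max", hi)])
    goals = []
    for combo in product(*level_sets):
        label = "_".join(f"{n}_{lvl}" for n, (lvl, _) in zip(names, combo))
        # Illustrative expression format only, not real UDCG syntax.
        expr = " && ".join(f"{n} == {val}" for n, (_, val) in zip(names, combo))
        goals.append((label, expr))
    return goals

goals = coverage_goals(INTERFACE)
print(goals[0])
```

Each expression is then a target for the vector generator, which is responsible for finding stimuli that make it true.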

Manually created test cases could optionally be included but are not essential for the workflow. Any gaps would be filled by the automatic test generation, configured to handle the desired input combinations (cover the User-defined Coverage Goals) and achieve 100% MC/DC coverage.

Since each model was tested multiple times (for each Matlab version), the Pipeline contained two nested for-loops designed to reuse generated vectors to reduce the overall runtime.

- For each Matlab version
  - For each model in the repo
    - Create Test Project
      - includes auto-code generation with integrated hand-code via CRL
      - analyzes the model and code structure and the mapping between model and code interfaces (ports <-> variables, etc.)
      - creates an annotated copy of the code for coverage measurement
    - Create Input Combinations (e.g., min/max/center) via UDCG
    - [optional] Re-use existing test cases
    - Generate vectors for input combinations & MC/DC
    - Run Back-to-Back MIL vs. SIL with the selected Matlab version
    - Create Report
- Create Overview Report
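The loop structure above, including the reuse of generated vectors, can be sketched as follows. This is a Python stand-in for illustration, while the real workflow is a Jenkins Pipeline built on the BTC Pipeline steps. The Matlab release names, model names and helper functions are hypothetical, and caching by model name is an assumption about how the reuse could work.

```python
MATLAB_VERSIONS = ["R2019b", "R2020b"]        # hypothetical selection
MODELS = ["interp1d.slx", "saturate.slx"]     # hypothetical model files

def generate_vectors(model):
    """Stand-in for the expensive vector generation step."""
    return [f"{model}:vector{i}" for i in range(3)]

def run_back_to_back(model, matlab_version, vectors):
    """Stand-in for the MIL vs. SIL run; always passes in this sketch."""
    return {"model": model, "matlab": matlab_version,
            "vectors": len(vectors), "result": "PASSED"}

def run_all(matlab_versions, models):
    vector_cache = {}      # model -> vectors generated in an earlier iteration
    generation_runs = 0
    results = []
    for version in matlab_versions:
        for model in models:
            if model not in vector_cache:
                vector_cache[model] = generate_vectors(model)
                generation_runs += 1
            results.append(run_back_to_back(model, version, vector_cache[model]))
    return results, generation_runs

results, generation_runs = run_all(MATLAB_VERSIONS, MODELS)
print(len(results), generation_runs)  # 4 test runs, but only 2 generation runs
```

With this structure, supporting another Matlab version means adding one entry to the version list, and only the back-to-back runs are repeated for it.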

Fully automated Jenkins Pipeline

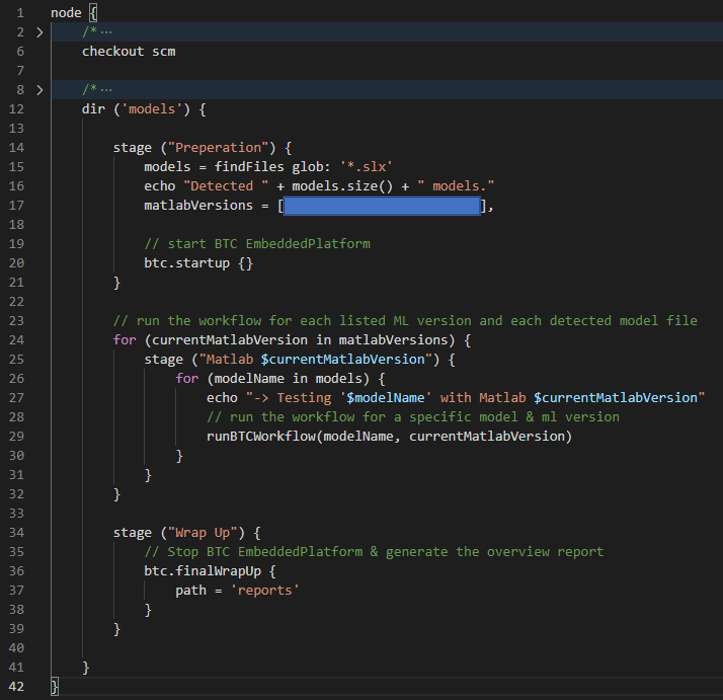

The resulting Pipeline was surprisingly simple: it checks out the repository, detects the models based on their file extension and invokes the test workflow for each model and Matlab version.
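Detecting models by file extension is a small step, sketched here in Python rather than in the Pipeline's own scripting language. The extensions `.slx` and `.mdl` are the Simulink model formats. Whether the actual Pipeline looks for both is an assumption, and the repository layout in the example is made up.

```python
import tempfile
from pathlib import Path

MODEL_EXTENSIONS = {".slx", ".mdl"}  # assumption: both Simulink formats count

def find_models(repo_root):
    """Return the repo-relative paths of all model files, sorted for stable order."""
    root = Path(repo_root)
    return sorted(
        path.relative_to(root).as_posix()
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in MODEL_EXTENSIONS
    )

# Build a throwaway directory tree that stands in for the checked-out repository.
with tempfile.TemporaryDirectory() as repo:
    for rel in ("math/interp.slx", "math/interp.c", "filters/lowpass.mdl", "README.md"):
        file_path = Path(repo, rel)
        file_path.parent.mkdir(parents=True, exist_ok=True)
        file_path.touch()
    models = find_models(repo)

print(models)
```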

To keep the Pipeline easy to read, the test workflow can be defined in a separate method. With the PLL “UDCG” (line 78 of the Pipeline script), the input combinations added in lines 69-71 are considered for the automatic test generation, alongside MC/DC.

The following image shows us how the Pipeline was applied to different categories of library routines which made it easy to identify the areas that required further attention.

Reporting

An overview report can be generated to serve as the entry point to quickly see the overall status. It’s a high-level view with an aggregated PASSED / FAILED result that lists each project with its result and links to the project-level report and to the project file for direct, on-demand access to more details.

For each combination of model <-> Matlab version, the project-level report lists the performed steps and results and links to further details.
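The aggregation behind such an overview is simple: the overall status is PASSED only if every project passed. The Python sketch below illustrates this with made-up project results. The field names, file names and the plain-text layout are assumptions and do not reflect the format of the actual report.

```python
def overview(project_results):
    """Aggregate project results into an overall status plus one line per project."""
    all_passed = all(r["result"] == "PASSED" for r in project_results)
    overall = "PASSED" if all_passed else "FAILED"
    lines = [f"Overall: {overall}"]
    for r in project_results:
        lines.append(f"{r['model']} | {r['matlab']} | {r['result']} | {r['report']}")
    return overall, lines

# Hypothetical results for two models tested in one Matlab version.
project_results = [
    {"model": "interp1d", "matlab": "R2020b", "result": "PASSED",
     "report": "interp1d_R2020b/report.html"},
    {"model": "saturate", "matlab": "R2020b", "result": "FAILED",
     "report": "saturate_R2020b/report.html"},
]

overall, lines = overview(project_results)
print("\n".join(lines))
```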

Summary

The quality improvement on the library routines pays off, since it reduces the analysis and debugging effort for the production teams. Because the tested library routines can be used in most production models, optimizing this process brings a tremendous benefit in terms of both reduced effort and improved software quality.

The project really took off after introducing the User-defined Coverage Goals in combination with the automatic test generation and back-to-back test. No manual steps are involved in the proposed test workflow, which allows it to scale easily for larger projects if needed in the future. It’s also worth noting that when new Matlab versions are adopted, they can be added in a matter of seconds with a quick tweak to the script.

We would also like to give additional thanks to Mohammad Kurdi for presenting this approach at the Ford-internal Software Quality Tech Day in May 2021.